For those who still believe that HTTP is faster than HTTPS these days.

Note: This article is a translation of my original article written in Korean (2017-05).

I'm not a native English speaker.

The Chromium developers have announced that, starting with Chrome 62, they'll label HTTP pages as 'Not Secure' when users enter data on them, as well as all HTTP pages in Incognito mode.

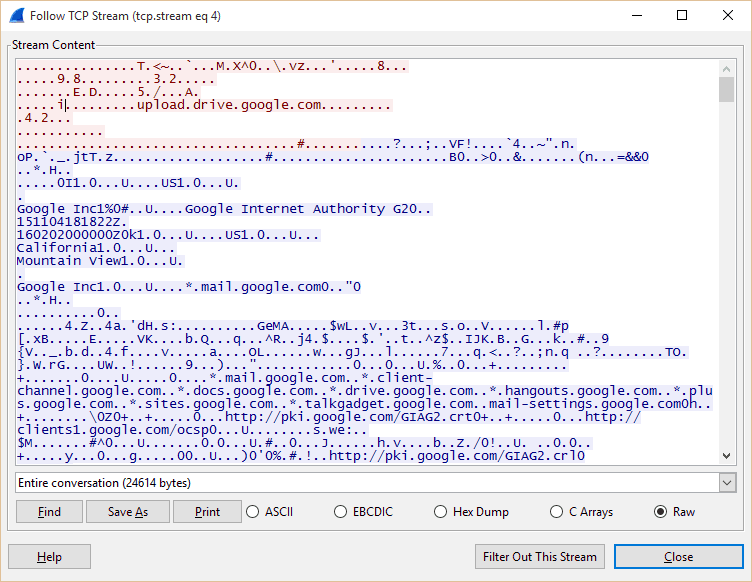

It is widely known that HTTP is vulnerable to data leaks and attacks. An attacker doesn't even need an elaborate MITM (man-in-the-middle) setup, and when a site's domain is not DNSSEC-signed, it's hard to tell whether the data you receive has been tampered with. To address these problems, HTTPS was introduced, running HTTP over SSL (Secure Sockets Layer), which has since been succeeded by TLS. However, this extra layer has also introduced some costs for both clients and servers.

Encryption costs

Before any data can be exchanged, HTTPS must complete a handshake. For a detailed walkthrough, see http://www.moserware.com/2009/06/first-few-milliseconds-of-https.html

Data can be transferred securely only after the server and client have agreed on encryption algorithms and exchanged the corresponding keys. These days, DHE-RSA (RSA for signatures, Diffie-Hellman for key exchange) and its elliptic-curve variant ECDHE-RSA are mainly used. There is also OCSP (Online Certificate Status Protocol), which lets the client ask the issuing authority whether the certificate is still valid.

The good news is that once these steps are complete, communication is fast. Keep-Alive reduces the cost further, because it keeps the connection, and with it the TLS session, open for subsequent requests.
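To see how much a cached session saves, here is a minimal Go sketch, assuming Go's own `crypto/tls` stack and a local `httptest` server rather than a real website. Keep-alive is deliberately disabled so that every request opens a brand-new TCP+TLS connection; with a client session cache, only the first handshake should be a full one, and later ones resume the cached session (reported by `ConnectionState.DidResume`). The helper name `resumptionFlags` is hypothetical.

```go
package main

import (
	"crypto/tls"
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
)

// resumptionFlags makes n sequential HTTPS requests, each on a fresh
// TCP+TLS connection, and reports whether each handshake resumed a session.
func resumptionFlags(n int) ([]bool, error) {
	ts := httptest.NewTLSServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		io.WriteString(w, "hello")
	}))
	defer ts.Close()

	// Start from the test client's transport, which already trusts the test certificate.
	tr := ts.Client().Transport.(*http.Transport).Clone()
	// Remember session tickets so later handshakes can be abbreviated.
	tr.TLSClientConfig.ClientSessionCache = tls.NewLRUClientSessionCache(16)
	// Force a new connection (and therefore a new TLS handshake) per request.
	tr.DisableKeepAlives = true
	defer tr.CloseIdleConnections()
	client := &http.Client{Transport: tr}

	flags := make([]bool, 0, n)
	for i := 0; i < n; i++ {
		resp, err := client.Get(ts.URL)
		if err != nil {
			return nil, err
		}
		io.Copy(io.Discard, resp.Body)
		resp.Body.Close()
		flags = append(flags, resp.TLS.DidResume)
	}
	return flags, nil
}

func main() {
	flags, err := resumptionFlags(3)
	if err != nil {
		panic(err)
	}
	fmt.Println("resumed per request:", flags)
}
```

A resumed handshake skips the certificate exchange and the server's signature, which is where most of the handshake's CPU cost sits; keep-alive goes one step further and skips the handshake entirely.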

Stronger encryption generally means more computation, and performance matters. If every request required this handshake, we could not call HTTPS low-cost. So far (as of July 2017), this has been an obstacle to serving every page over HTTPS, and it is the fundamental reason people think "HTTPS is so slow and requires high-performance machines".

HTTPS in production

But the computing world has changed. Machines today are far faster than before, while the encryption TLS uses for HTTPS has not become any more demanding. See the following results of `openssl speed rsa`, run single-threaded (without the `-multi` option):

Intel Xeon 5130 (Mac Pro 2007, similar to i5-3570K)

| Key size | sign | verify | sign/s | verify/s |
|---|---|---|---|---|
| rsa 512 bits | 0.000148s | 0.000010s | 6778.9 | 96769.7 |
| rsa 1024 bits | 0.000499s | 0.000029s | 2005.4 | 34371.6 |
| rsa 2048 bits | 0.003225s | 0.000100s | 310.1 | 10036.3 |
| rsa 4096 bits | 0.023140s | 0.000367s | 43.2 | 2727.1 |

Intel Core i7-2600 (2011)

| Key size | sign | verify | sign/s | verify/s |
|---|---|---|---|---|
| rsa 512 bits | 0.000054s | 0.000004s | 18556.8 | 267823.0 |
| rsa 1024 bits | 0.000158s | 0.000010s | 6317.2 | 99584.9 |
| rsa 2048 bits | 0.001123s | 0.000034s | 890.4 | 29142.7 |
| rsa 4096 bits | 0.008168s | 0.000124s | 122.4 | 8035.9 |
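If you want a comparable figure for your own hardware, the sketch below measures single-threaded RSA-2048 signing and verification rates with Go's `crypto/rsa`. It is a stand-in for `openssl speed rsa`, not the same benchmark: the implementations differ, so the absolute numbers will not match the tables above, but the shape should, with verification far cheaper than signing. `benchRSA` is a hypothetical helper name, and 2048 bits is used because it is the common certificate key size.

```go
package main

import (
	"crypto"
	"crypto/rand"
	"crypto/rsa"
	"crypto/sha256"
	"fmt"
	"time"
)

// benchRSA generates a key of the given size and measures single-threaded
// PKCS#1 v1.5 sign and verify throughput in operations per second.
func benchRSA(bits, iterations int) (signPerSec, verifyPerSec float64, err error) {
	key, err := rsa.GenerateKey(rand.Reader, bits)
	if err != nil {
		return 0, 0, err
	}
	digest := sha256.Sum256([]byte("benchmark message"))

	var sig []byte
	start := time.Now()
	for i := 0; i < iterations; i++ {
		// Private-key operation: what a server with an RSA certificate does per full handshake.
		if sig, err = rsa.SignPKCS1v15(rand.Reader, key, crypto.SHA256, digest[:]); err != nil {
			return 0, 0, err
		}
	}
	signPerSec = float64(iterations) / time.Since(start).Seconds()

	start = time.Now()
	for i := 0; i < iterations; i++ {
		// Public-key operation: what a client does to check the server's signature.
		if err = rsa.VerifyPKCS1v15(&key.PublicKey, crypto.SHA256, digest[:], sig); err != nil {
			return 0, 0, err
		}
	}
	verifyPerSec = float64(iterations) / time.Since(start).Seconds()
	return signPerSec, verifyPerSec, nil
}

func main() {
	sign, verify, err := benchRSA(2048, 200)
	if err != nil {
		panic(err)
	}
	fmt.Printf("rsa 2048 bits: %.1f sign/s, %.1f verify/s\n", sign, verify)
}
```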

CPU performance has improved greatly in recent years, and encryption has benefited enormously. Here is what some large companies have reported:

On our production frontend machines, SSL/TLS accounts for less than 1% of the CPU load, less than 10 KB of memory per connection and less than 2% of network overhead. Many people believe that SSL/TLS takes a lot of CPU time and we hope the preceding numbers will help to dispel that. -- Adam Langley, Google

We have deployed TLS at a large scale using both hardware and software load balancers. We have found that modern software-based TLS implementations running on commodity CPUs are fast enough to handle heavy HTTPS traffic load without needing to resort to dedicated cryptographic hardware. -- Doug Beaver, Facebook

Elliptic Curve Diffie-Hellman (ECDHE) is only a little more expensive than RSA for an equivalent security level… In practical deployment, we found that enabling and prioritizing ECDHE cipher suites actually caused negligible increase in CPU usage. HTTP keepalives and session resumption mean that most requests do not require a full handshake, so handshake operations do not dominate our CPU usage. We find 75% of Twitter’s client requests are sent over connections established using ECDHE. The remaining 25% consists mostly of older clients that don’t yet support the ECDHE cipher suites. -- Jacob Hoffman-Andrews, Twitter

**SSL/TLS is not computationally expensive any more.**

Encryption costs on large file transfers

Many people suggest sending file hashes over HTTPS and then serving the files themselves over HTTP, because they believe serving files over HTTPS requires high-performance machines. But we need to think this through. As the article "Yes, Python is Slow, and I Don't Care" shows, CPUs are far faster than networks or disks. Most server-class network adapters top out at 10 Gbps, even on large single instances. This means that when you really need to send large files to clients, the limit is set by physical devices such as SSDs and network adapters, which cannot keep up with that much demand, not by the CPU. So even if you plan to use high-performance parts like a 10 Gbps PCI Express SFP+ NIC or a PCI Express SSD, dropping HTTPS neither increases the traffic a server can handle nor meaningfully reduces CPU usage. The math is simple: by a rough estimate, a 10 Gbps adapter can serve only about 100 simultaneous downloads at 100 Mbps each.

On the other hand, sending file hashes over HTTPS and then serving the files over HTTP is inefficient and considered bad practice, because every download needs two TCP connections under different protocols. You might think it actually needs four, since TCP is two-way, but a single TCP connection already carries data in both directions, so it is two. That doubles the TCP setup overhead compared to a single HTTPS connection and doubles the handshake round trips. With the 100 simultaneous downloads from the paragraph above, that means 200 TCP connections instead of 100, or 600 handshake segments (SYN, SYN-ACK, ACK) instead of 300.
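The back-of-the-envelope numbers in the two paragraphs above can be written out as a tiny Go sketch. It assumes bandwidth is shared evenly between downloads and counts only the three TCP handshake segments per connection; the TLS handshake is left out because both designs pay it once per download (the hash is fetched over HTTPS either way). Both function names are hypothetical.

```go
package main

import "fmt"

// perConnectionMbps splits a NIC's capacity evenly across simultaneous downloads.
func perConnectionMbps(nicGbps float64, downloads int) float64 {
	return nicGbps * 1000 / float64(downloads)
}

// handshakeSegments counts TCP three-way-handshake segments (SYN, SYN-ACK, ACK)
// for downloads that each open connsPerDownload connections.
func handshakeSegments(downloads, connsPerDownload int) int {
	const segmentsPerHandshake = 3
	return downloads * connsPerDownload * segmentsPerHandshake
}

func main() {
	fmt.Println(perConnectionMbps(10, 100)) // prints 100 (Mbps per download)
	// Hash over HTTPS plus file over HTTP: two connections per download.
	fmt.Println(handshakeSegments(100, 2)) // prints 600
	// Everything over a single HTTPS connection.
	fmt.Println(handshakeSegments(100, 1)) // prints 300
}
```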

When talking about large file transfers, almost no one mentions network bandwidth or disk performance; most people focus only on CPU performance. It makes little sense to say that HTTPS causes high load, because large file transfers are demanding with or without encryption, and the CPU is not the part that runs out first.

Most cloud and CDN providers, such as Google Drive, Dropbox, iCloud, OneDrive, and Cloudflare, already serve files of every size over HTTPS. This shows that the CPU is not the bottleneck; network bandwidth is.

Google, SPDY, and HTTP/2

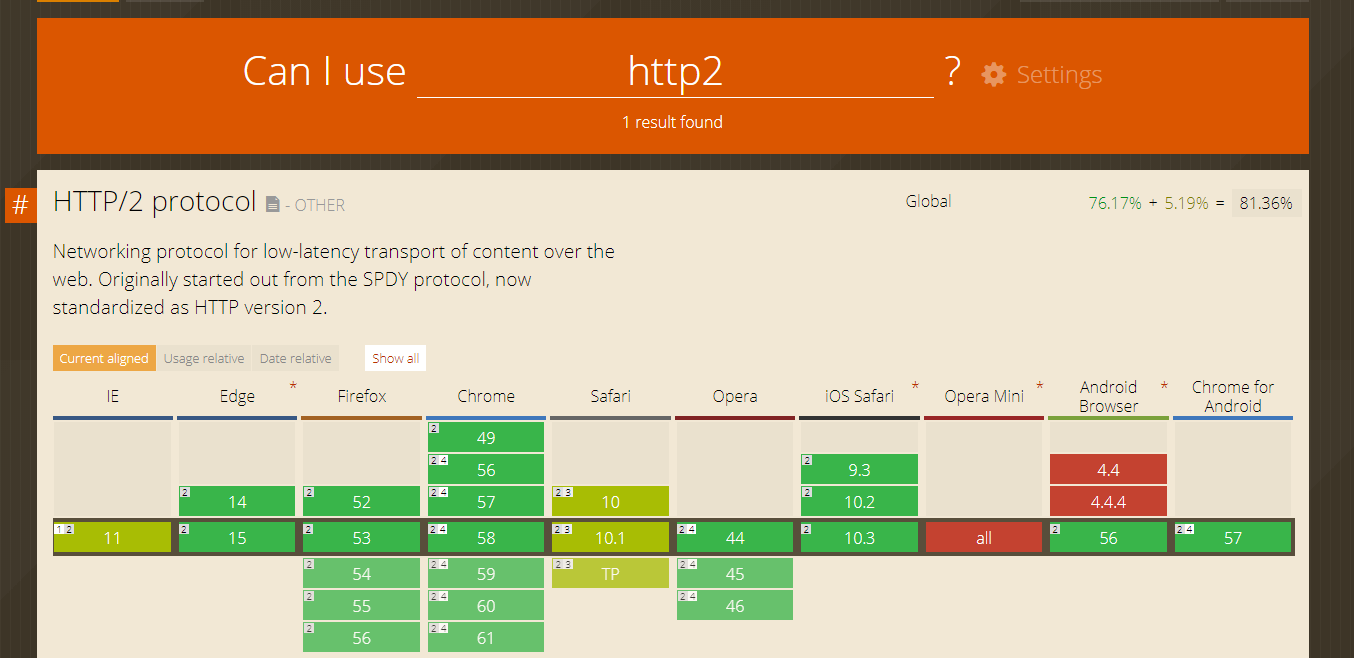

In 2009, Google announced the SPDY ("speedy") project, which multiplexes and prioritizes requests over a single TCP connection to make the web faster. A few years later, in 2015, HTTP/2, based on Google's SPDY and compatible with HTTP semantics, was officially standardized as RFC 7540.

As its name implies, HTTP/2 was designed for HTTP in general (the specification also defines HTTP/2 over cleartext connections), but major web browsers support HTTP/2 only over secure connections, which in practice means HTTP/2 is a feature of HTTPS.

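HTTP/2, built for a faster web, really does seem designed for HTTPS. HTTP/2 is a binary protocol, whereas HTTP/1.1 is a plaintext protocol. If we think about it carefully, carrying all requests over a single connection benefits HTTPS far more than HTTP, because the costly TLS handshake is then paid once per connection instead of once for each of the several parallel connections that HTTP/1.1 browsers open.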
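To make "binary protocol" concrete: every HTTP/2 frame starts with a fixed 9-byte header (RFC 7540, section 4.1) that carries a stream identifier, and that identifier is what lets frames from different requests interleave on one connection. The Go sketch below encodes and decodes only that header; it is illustrative, the helper names are hypothetical, and a real implementation (for example the `Framer` in `golang.org/x/net/http2`) also handles payloads, HPACK header compression, and flow control.

```go
package main

import (
	"encoding/binary"
	"fmt"
)

// Two of the frame types defined in RFC 7540, section 6.
const (
	frameData    byte = 0x0
	frameHeaders byte = 0x1
)

// encodeFrameHeader builds the 9-byte frame header: a 24-bit payload length,
// 8-bit type, 8-bit flags, then 1 reserved bit and a 31-bit stream ID.
func encodeFrameHeader(length uint32, typ, flags byte, streamID uint32) [9]byte {
	var h [9]byte
	h[0] = byte(length >> 16)
	h[1] = byte(length >> 8)
	h[2] = byte(length)
	h[3] = typ
	h[4] = flags
	binary.BigEndian.PutUint32(h[5:], streamID&0x7fffffff) // reserved bit left as 0
	return h
}

// decodeFrameHeader reads the length, type, flags and stream ID back out.
func decodeFrameHeader(h [9]byte) (length uint32, typ, flags byte, streamID uint32) {
	length = uint32(h[0])<<16 | uint32(h[1])<<8 | uint32(h[2])
	return length, h[3], h[4], binary.BigEndian.Uint32(h[5:]) & 0x7fffffff
}

func main() {
	// Frames of two requests (streams 1 and 3) interleaved on a single connection.
	frames := [][9]byte{
		encodeFrameHeader(5, frameHeaders, 0x4, 1), // 0x4 = END_HEADERS
		encodeFrameHeader(5, frameHeaders, 0x4, 3),
		encodeFrameHeader(1024, frameData, 0x0, 1),
		encodeFrameHeader(512, frameData, 0x1, 3), // 0x1 = END_STREAM
	}
	for _, f := range frames {
		length, typ, flags, stream := decodeFrameHeader(f)
		fmt.Printf("stream=%d type=%#x flags=%#x length=%d header=% x\n", stream, typ, flags, length, f)
	}
}
```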

In practice, the web has changed a lot as HTTP/2 has become more popular, including growth in the number of websites supporting HTTPS. According to W3Techs statistics from August 2018, HTTP/2 is used by 29.0% of all websites. HTTP/2 still has great growth potential, and because many CDN services such as Cloudflare, MaxCDN, and Fastly support it, people all over the world can already use HTTP/2 connections.

HTTP's security problem

In Feb 2018, NAVER, the biggest web portal in Korea, was marked "Not Secure" because it served its website over plain HTTP only. In response, NAVER said, "Our landing page does not contain any private data, only public data, and we serve our authentication and search pages over HTTPS." Let's look at what's wrong with that.

This is also one reason why DNS matters. DNS was designed in a simple way: the client asks "What are the IPv4 addresses (A records) for xxx.xxx?", and the server answers "The IPv4 addresses (A records) for xxx.xxx are [x.x.x.x, x.x.x.x, ...]". Because of this simplicity, security was not considered in the design, and DNS has produced a long list of security problems as a result. Today there is DNSSEC, which verifies that a domain's records, and so the server the name points to, are authentic; however, DNSSEC does not encrypt anything, so the fundamental problem has not been solved yet.

So why do we need an encrypted connection even when we don't transfer private data? The answer is internet censorship and surveillance, which are nearly universal. Korea, for example, already censors HTTP connections by inspecting the Host header with DPI (Deep Packet Inspection) in order to block websites that are illegal in the country. This is where the problem starts: if the government can inspect connections, it can also learn users' internet activity and behavior. For example, if a person requests news.naver.com and then one of its subpages, an observer can single out that user and see exactly which pages they visited. The same applies to DNS. So the real problem is censorship: every user has the right to be protected, and no one should have a way to watch what they read.

Back to NAVER: for these reasons, we must use HTTPS for every website we make. NAVER said its landing page contains no sensitive data, but not only the landing page but also every subpage reached from it was served over HTTP, which hands hints to anyone censoring or monitoring the connection. Furthermore, an attacker could modify the page in transit to compromise its visitors.

DNS problem

As mentioned above, DNS itself is not encrypted by default, so anyone who can monitor your traffic can see which domains you look up. To help mitigate this, some projects encrypt the whole path to the DNS server:

- DNSCrypt

- DNS-over-HTTPS from Google Public DNS

Certificates are free

Certificates used to be expensive, and this was one reason people didn't use HTTPS. In the past, we had to buy them from trusted root authorities like Comodo, VeriSign, Symantec, GeoTrust, Thawte, etc. But many internet companies and organizations like Cisco, Google, Mozilla, Fastly, etc. wanted people to use HTTPS everywhere, so they backed Let's Encrypt. Let's Encrypt issues free certificates to everyone, so every developer and operator can now get a certificate for free, and it has had the biggest effect on HTTPS adoption so far.

Let's Encrypt also introduced ACME (Automatic Certificate Management Environment), a protocol for automating certificate issuance, which gave rise to tools such as certbot and Go's ACME library. In other words, ACME makes it easy for anyone to obtain an HTTPS certificate without the traditional complex and troublesome process, so nobody can say "issuing an HTTPS certificate is so hard" or "certificates cost too much" anymore.

Move to HTTPS

I suspect many readers still won't believe what this article says, so try it yourself. Here is an HTTP vs HTTPS test: https://www.httpvshttps.com/. If your web browser supports HTTP/2, I guarantee you'll see HTTPS load much faster than HTTP. The gap grows when you reload the page, thanks to TLS session resumption. There is also a benchmark on the official Go website: https://http2.golang.org/gophertiles. It is a slightly different kind of test, but like the first one, it shows HTTPS is much faster. Admittedly, these tests are really about HTTP/1.1 vs HTTP/2, not HTTP vs HTTPS. But remember: in every web browser, HTTP/2 is ONLY supported on top of SSL/TLS.

Moving to HTTPS is not a big deal. Just note that browsers block some HTTP requests made from HTTPS pages (so-called mixed content), for example loading a script over HTTP from an HTTPS page. To migrate gracefully, review everything thoroughly and test in staging first. As of 2018, most major web servers support HTTP/2: Apache via the mod_h2 module from 2.4.12, nginx from 1.9.5 (older versions offered SPDY instead), and Node.js from 8.4 (initially experimental).
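Before switching, it helps to scan pages for mixed content. The Go sketch below is a rough heuristic, assuming a regular expression is good enough for a first pass (it is not a real HTML parser and misses CSS `url()` references and requests made from scripts): it reports plain-HTTP `src`/`href` URLs on tags that load subresources, and ignores ordinary `<a>` links, which browsers do not block. `findMixedContent` is a hypothetical helper name.

```go
package main

import (
	"fmt"
	"regexp"
)

// insecureRef matches plain-HTTP src/href attributes on tags that load
// subresources. Plain <a> links are deliberately not matched.
var insecureRef = regexp.MustCompile(
	`(?i)<(?:script|img|iframe|audio|video|source|embed|link)\b[^>]*?\s(?:src|href)\s*=\s*["']?(http://[^"'\s>]+)`)

// findMixedContent lists plain-HTTP subresource URLs found in an HTML page.
func findMixedContent(html string) []string {
	var urls []string
	for _, m := range insecureRef.FindAllStringSubmatch(html, -1) {
		urls = append(urls, m[1])
	}
	return urls
}

func main() {
	page := `<script src="http://cdn.example.com/app.js"></script>
<img src="https://img.example.com/ok.png">
<a href="http://example.org/">a normal link</a>`
	for _, u := range findMixedContent(page) {
		fmt.Println("insecure subresource:", u)
	}
}
```

The browser's developer console also lists the mixed-content requests it blocks, which is a useful cross-check in staging.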

If you use a major CDN such as Akamai, Cloudflare, AWS CloudFront, or Fastly, you don't have to do anything. Yay!

HTTPS (TLS) is not a performance issue. It is mandatory, not optional. The times are changing fast. Not only Google, Facebook, and Twitter but also websites that don't have to worry about leaking personal information for logged-out visitors, such as GitHub, Booking.com, and YouTube, use HTTPS by default.

In January this year (2010), Gmail switched to using HTTPS for everything by default. Previously it had been introduced as an option, but now all of our users use HTTPS to secure their email between their browsers and Google, all the time. In order to do this, we had to deploy no additional machines and no special hardware.

-- Overclocking SSL, 25 Jun 2010, Google

References: